<onWebFocus />

Knowledge is only real when shared.

Why You Shouldn't Dismiss Serverless

May 2022

Use Serverless infrastructure to your advantage.

The term Serverless can be very confusing as it suggests the absence of a server. As we will soon find out this isn't the case at all. Applications that aren't hosted in a Serverless way always have an instance of a server running. For periods of inactivity the server might sleep in order to save costs. On the other hand for periods of high activity additional server instances might be spun up. Serverless is different as nothing is running in between requests. Apart from the data required for rendering client-side React applications mostly serve static assets and load their data as JSON from a small API server. Server-side React frameworks like gatsby and next prerender pages whenever possible so that they can also be served as static assets. Serving the dynamic data required for rendering on the client usually requires something like an express server. Creating such a server is usually very simple. Different data is grouped under different URLs and usually returns or modifies data from a database. The problem with this setup is the high cost associated with always running a server.

What is Serverless?

Serverless isn't defined by the absence of a server but by the absence of a continuously running server. Keeping a server running requires a lot of memory and a dedicated CPU that's constantly running, whether or not anybody is using the website. As most developers know there are many websites out there that don't have many users but should still be reachable anytime without much delay. Serverless is solving exactly this hosting problem. Providers like Vercel are able to offer very cheap hosting compared to traditional services. Due to leveraging Serverless they are even able to offer a generous free tier to developers until a certain traffic limit is reached.

How does Serverless reduce the hosting cost so much? In a Serverless environment whenever there is a request a server to handle the approprite request will be started. When the request is finished the server will be stopped again. While under high load the server might just keep running and handle many requests this isn't guaranteed and up to the host provider.

What other benefits does this approach come with? Since Serverless assumes the absence of a continuously running server the application will automatically scale out of the box. Scaling and concurrency will be taken care of by the hosting provider which can easily boot up additional instances when there are many requests.

What are the drawbacks? When programming for Serverless the existence of a continuously running server cannot be assumed. Just like in parallel programming with threads one cannot assume requests will be processed in the order they arrive. In addition to that no state can be held in memory between requests so anything has to be persisted to a database. Currently, databases are often hosted separate from the Serverless infrastructure which adds significant delay.

Definitions

Serverless infrastructure will start server instances only on demand. Between requests or for parallel requests the server may or may not share the instance along with it's state. Only a few frameworks have access to such instances using a custom Node.js runtime.

Serverless Function exports a handler for a specific route to handle a request. In order to handle the request the function will receive the request and response object from the default Node.js http server and it's not possible to access any ports or start up your own server. Such functions will never share any state and are usually limited in the amount of memory, time and disk space they can use.

An Edge Function is a dynamic function that will be executed on the CDN. Compared to Serverless Functions they must use significantly less disk space, time and memory. Instead of using the Node.js runtime they run on the V8 JavaScript engine which is significantly faster but has some limitations. Edge Functions should not access any dynamic state from a database. That's because a database can only reside in a single location. Edge Functions can also be invoked as a middleware for regular Serverless Functions.

Prerender Functions render assets which can be cached on the CDN. During the render they also define when the cached assets generated should expire and the function is to be retriggered. They are the same as Serverless functions except that they define a cache header which allows further requests to be served from the CDN on the edge.

The official Wikipedia definition of Serverless computing can be confusing. It's mixing two unrelated terms: Server and computation. According to it's definition Serverless means that the computation instances (hardware) are managed for you. Whereas in our and the more popular definition such providers will also manage the instantiation of the server code (software) for you. The computation approach inspects the CPU load of the server and adds additional instances when necessary. The newer server based approach scales based on the amount of requests.

In our definition the same server instance can be shared between customers while the Wikipedia definition would only allow for the compute instance to be shared. Each switch between customers would require the customer specific server to be booted up. This is for example the case on Heroku where the basic unit is called a container instead of a function. If demand is high Heroku will automatically deploy another container. If serveral containers are running they may not share the same state similar to Serverless functions. Since both services are managed you only pay for the time where you actively use the service. However, on Heroku this also includes the time required to start up the container which includes the server software while on Vercel the server software is managed and you only pay for the time your functions take to process a request. Since Heroku has no access to the server software but only to the server hardware it's not aware of the request lifecycle. Instead it will go to sleep after no new incoming requests for a period of 30 mintues where the container is idle. This results in a single request using 30 mintues in the worst case while on Vercel the same request would never exceed the 5 seconds required to process the request. On Heroku computation can continue even after a response to the initial request has been sent.

JAMStack

Although the use of this term to describe a certain architecture has recently died down it's still worth discussing. While JAMStack is less flexible than todays Serverless approaches, it goes in the same direction and started prerendering on the server using a client-side framework. It describes an approach where a website is fully rendered during the build and then distributed as static assets from a CDN. Most websites and blogs don't really require a lot of interactive parts and so this approach has worked well. Through a process called hydration the markup will render again on the client allowing for interactivity. Interactivity usually requires dynamic data which can be accessed through an API. Since these APIs can usually be split into small and separate parts Serverless functions are perfectly suited to make it dynamic.

While next can also dynamically render React during the request it will prerender any pages to markup that don't require any dynamic data. Therefore, next is perfectly capable of implementing the JAMStack.

The Whole Thing Sounds Familiar? Well, It Is.

When Serverless functions plus file system based routing is used by a framework like next it is actually the same paradigm PHP used back in the days. In PHP the code matching the file in the URL is executed on every request returning the markup. The Apache server that's being run by the hosting provider can handle dozens of different projects just as in a Serverless environment. This has allowed PHP to offer very cheap hosting. For websites PHP has been the most popular backend for a very long time with countless Wordpress websites out there still relying on it.

For JavaScript backend frameworks to gain ground comparable to PHP a Serverless approach is crucial.

Since JavaScript is already running in the Browser, also writing the backend in Node.js with JavaScript has gained popularity. Meanwhile, PHP has been losing steam for many years already. A cheap and flexible Serverless approach might just be what's needed for JavaScript to be what PHP was a decade ago.

Requests at the Speed of Light

Even though one might not notice, every browser request has travelled a very long distance through fiber cables. The information is sent through these cables in the form of photons or what we colloquially call light. Photons inside these cables travel at the speed of light, making these requests as fast as theoretically allowed.

A Google search made from Zürich to a Google datacenter in Mountain View, California has to travel first to Mountain View where the request is processed and then sent back to Zürich. This amounts to a minimal distance of 18'740 kilometers that have to be overcome. Under theoretically optimal circumstances, this still requires 63 milliseconds. Such a connection can also be described as having a ping of 63 ms. For many gamers playing highly interactive games that's quite slow and that's the reason why gaming servers are split up by regions as such far requests are simply too slow. Obviously, 63 milliseconds isn't the full story as in between there are various stations and processing to be done.

This is also an area where Serverless requires to make optimizations to provide users from all over the world with fast responses. Serverless frameworks are already built to allow for optimizations which were for the average developer unthinkable back in the days of PHP.

Content Delivery Network - The Edge 🌎

While a ping in range of 63 ms is much faster than what's needed for websites, this is an area where Vercel is optimizing with the newly introduced Edge Network. This network will handle anything that can be cached like static markup, images and code. For these requests responses will be very quick as the request will be routed to the nearest datacenter.

Requests involving Serverless Functions that cannot be cached or use getServerSideProps will still be routed to a central location and take longer.

Database

Unlike the previously popular LAMP stack for PHP (Linux, Apache, MySQL and PHP) which comes with a MySQL database built in, Serverless usually doesn't come with a database. There are many database services available that work well with any Serverless setup.

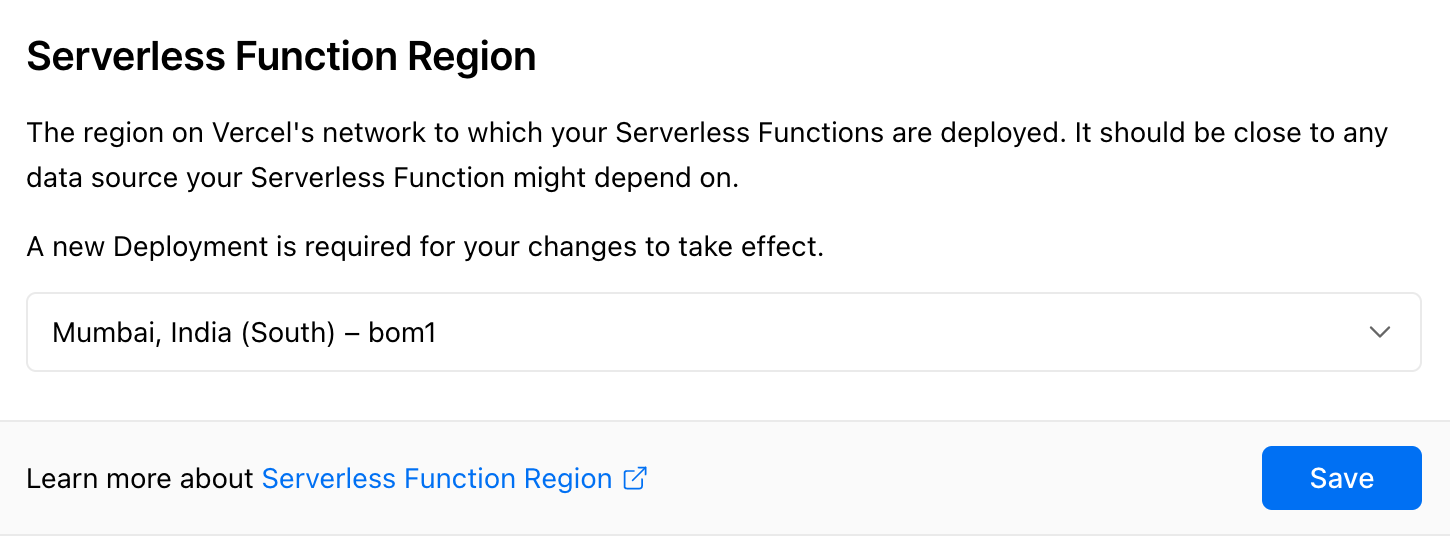

Since Serverless functions are stateless by default most of them will need access to a database. In order to provide consistent data the database cannot be distributed and only accessed by one request at a time. Most database services are connected through HTTP or TCP connections, where requests can add additional performance penalties if the database is far away from the Serverless function datacenter. Vercel allows you to set the location where your Serverless Functions are deployed so that they can be close to the location of the external database you choose. Planetscale a MySQL database service allows you to select the region during the creation of the database.

Choose the region where Serverless Functions should be deployed.

Just like a Serverless service a database requires a constantly running server. Without any effort services can easily and cheaply run many databases on the same server for many different customers. Many database providers offer free tiers to try them out and use for side projects.

When it comes to database providers there are many choices and no choice has yet become very popular. The choice very much depends on the context of your application. MySQL is still a good choice especially for developers already familiar with the syntax from using it with PHP. There are so called NoSQL databases which can store plain JavaScript objects and therefore make interacting with a database much more like working with regular JavaScript data. Since there is usually still a lot of new syntax to learn ORM tools like Prisma wrap a traditional database to provide easy and typed access from your code. Cloudflare a CDN provider that offers what they call Workers which are the same as Serverless Functions has recently announced to be adding a SQLite database to their workers. The choice of also providing a database seems obvious considering that almost every application requires some sort of state.

Serverless Hosting Providers

Websites follow a power law where a few are very popular, while most receive very few requests. Hosting providers have long realized that a free tier limited to low usage can attract many developers that will later be willing to pay large amounts once the project becomes a commercial success. Serverless providers are able to offer much cheaper plans and often a free tier to get started.

Vercel has already been mentioned and seems to be the most popular Serverless provider in the JavaScript ecosystem. The user experience is stellar and anybody can deploy their private projects for free. Once your project becomes commercial or is attracting significant traffic you will be asked to pay $20 a month. This isn't cheap but definitely competitive. As already mentioned Serverless isn't meant to be optimal for high traffic sites as it will scale well but at a considerable cost. Once a product is a commercial success this price will feel like peanuts in most cases. Vercel has presets for almost any Open Source JavaScript framework out there and the platform grew out of hosting their own increadibly popular Next.js React framework. As mentioned in the Open Source Funding post they are also sponsoring many Open Source developers to work full-time on various projects.

Providers that offer classic hosting running a stateful server like netlify or heroku also manage to offer quite low prices by setting the server into a sleep mode after a period of inactivity. While this works it requires more resources and the first request after hibernating can take very long. Heroku was recently suffering an incident involving GitHub integration that made it impossible to deploy from GitHub (which most people do) for almost two months. This might be an indicator that Salesforce is realizing the approach doesn't match their innovative culture anymore and is allocating fewer resources internally. Update In August 2022 Salesforce announced they will no longer offer free plans after November that year.

λ Serverless Functions

Basic Serverless Functions on Vercel are very simple. They export a handler that will receive information about the request and an object to add the response to. The request and response objects are very similar to the Node.js HTTP Server as well as express. When migrating an express server it's also possible to use your own express app. These functions can also interact with a database to provide user specific or up-to-date data.

Client-Side vs. Server-Side Rendering

In a CSR (Client-Side Rendering) application React is sent to the browser first, where once it's executed the markup will be rendered on the client. SSR (Server-Side Rendering) first prerenders the markup on the server and sends back the rendered markup that can be displayed instantly. The React code is also sent and will run just as in a CSR application in a process called hydration. SSR will display markup quicker than CSR but will take as long until the application becomes interactive. SSR requires some knowledge to use it properly although frameworks like next have made it almost as easy as writing regular React.

Better initial rendering performance is the main advantage of SSR. Once all the assets have arrived, rendering the page on the client usually doesn't take very long and regular users are unlikely to notice a difference between a CSR or a SSR application. Optimizing for initial rendering performance came into focus for developers as it's used by Chrome Lighthouse where SSR is almost necessary to achieve a good performance score. While a Serverless approach has made dynamic rendering on the server very accessible and cheap, it's still much more lucrative the client to render which is free and nowadays every smartphone is as fast as any server. PHP prerenders the markup on the server because a decade ago dynamic client-side rendering didn't exist.

The following comparison will take a look how CSR and SSR compare in case the server has to access data from an external database on the initial request. Doing this has been very common in the days of PHP where client-side rendering didn't yet exist.

The client-side approach will directly send a response containing an empty HTML file and linking to the code containing the React rendering logic. This response will arrive quickly as everything can be cached on the nearest CDN. The time it takes to load the React and MobX code from the server and the time to render it in the browser are similar with rendering usually being slightly faster. According to the Chrome lighthouse tool a loader is rendered after 0.2 seconds with the actual content being rendered through React and MobX after 0.5 seconds. As pictured below the api/data call is only triggered after the code has been loaded. While at this point the page is already fully interactive the time this call takes will be noticeable and should be indicated with a loader or something similar.

index.html

main.js

api/data

With the server-side approach things look different. When the user sends the request to the server the page won't be cached on the CDN because it's usinggetServerSideProps requiring every request to be processed by a Serverless function. So the request will first travel to the central location where also the Serverless Functions reside. There the page will be rendered by the server triggering a request to the database which usually takes some time. Once the data is available and the rendering is done, the prerendered markup will be sent back. Once it arrives in the browser the page is immediately shown in full to the user. Hydration which amounts to another render this time on the client, only requires the code to load from the CDN and execute in order for the page to become interactive. The code can be cached as the data passed as props from getServerSideProps will be injected as JSON into the initial document.

index.html

main.js

The following interactive demo let's you try out both approaches side-by-side and see if you can notice any differences from a user perspective. The simulated database delay on the server can be configured.

Delay in Milliseconds

Client-Side Rendered Page

Server-Side Rendered Page

The main thing to be taken away from this is that initial access to a database can slow down a SSR request to the point where it doesn't offer any advantages over a purely CSR approach. It even takes longer until something shows up on the page and React is ready in the browser. Apart from turning parts of the page into CSR this performance issue cannot be optimized away later and therefore constitutes an important architectural decision. On the other hand though, Next.js will automatically optimize switching to another route where additional database calls are necessary. In such cases nothing will be rendered on the server and only the data loaded mimicking the CSR approach.

Update Starting with Next.js version 13 the newly introduced experimental app directory allows to specify layouts and loading states. This results in partial markup being sent to the client before everything is fully rendered. This will not affect the overall performance of the above SSR example but improve the time to initial render.

SSR connected to dynamic data requires the server to render the page anew for every user on every initial request. Needless to say, this can somewhat defeat one of the goals of Serverless which is to provide cheap hosting. Todays devices will usually render faster than the server and all that power is available for free. There are some use cases like rendering a PDF or highlighting some code where a lot of unnecessary rendering code would have to be sent to the client while it's enough to just transfer the result of the render and not perform any hydration. Frameworks like Next.js are highly optimized and will preload the data and rendering logic necessary to render the page for any local link present so that possible future interactions will be instant achieving behaviour similar or even better than a CSR page. On a lot of websites using React much of the rendered markup will not be interactive in any way. This fact has been recognized by frameworks like astro and React Server Components which allow the developer to specify which React components need hydration.

Getting Started with Serverless

With some adaptations pretty much any traditional setup can be converted to be hosted on a Serverless service. Starting out with a Serverless template is much easier. The default choice is to use Next.js:

Next will use Server-Side Rendering out-of-the-box and Serverless functions can be placed in /pages/api.

In cases where client-side rendering is fine it's possible to deploy any create-react-app or vite application to a Serverless service. My own tool called papua offers a dedicated template that includes Serverless functions and can directly be deployed to Vercel. When developing locally the functions will be registered on the local webpack dev server.

It's also possible to host just an API on a Serverless environment. The following squak template allows you to build an API in TypeScript.

Switching to Serverless

Switching to Serverless can take a good amount of refactoring and cause considerable pain. The main task is to swich to using a persisted data source anywhere where there is data from memory accessed. Next, the API routes that can be handled as Serverless Functions should be moved into one such function. Lastly, all the setup specific to the previous architecture needs to be removed and replaced where necessary.

Building a Serverless Framework

In order for a CMS, shop or blog framework to be compatible with the Serverless infrastructure on Vercel the build needs to output Serverless Functions. The Build Output API specifies how the structure of these functions must look like. Much of it is about where to place static assets, rewriting routes and optimizing images. All of this has to be placed in a folder called .vercel/output. Vercel will then merge the output together and deploy it to the root. The following source file in the vercel repository lists all supported frameworks and how they integrate.

Anatomy of a Serverless Function

Imagine we are creating a CMS and the user has created a page that should be displayed on the /manual route. For a Serverless Function to show up as manual on the top-level we have to create the following folder .vercel/output/functions/manual.func.

Inside this folder we place a .vc-config.json file. The following three properties are required.

When it comes to the runtime you currently don't have many options as only Node.js is supported. More important is the handler that must be a JavaScript file which will export a handler that takes a request and returns a response. As in any Node.js runtime the Serverless Function can access the filesystem and import further JavaScript files required for execution.

A serverless Function cannot access other Serverless Functions but can share common code through static files.